# Import required packages

import os

import pandas as pd

import matplotlib.pyplot as pltIn today’s blog post, we’ll go through the “Introduction to PyTorch” tutorial available from PyTorch’s online learning community.

In a future blog post, I will take the foundation developed through this walkthrough and explore a modified data analysis workflow, with a more complicated neural network and additional hyperparameter training for a different set of data.

With background out of the way, let’s get started~

```{r}

library(reticulate)

use_python('/opt/anaconda3/bin/python')

```Most machine learning workflows involve uploading data, creating models, optimizing model parameters, and performing predictions on input data. This tutorial introduces you to a complete ML workflow implemented in PyTorch.

In PyTorch, we use tensors to encode the inputs and outputs of a model, as well as the model’s parameters. Tensors are a specialized data structure that are very similar to arrays and matrices.

import torch

from torch import nn

from torch.utils.data import Dataset

from torch.utils.data import DataLoader#import torchvision

from torchvision import datasets, transforms

from torchvision.transforms import ToTensor, Lambda

from torchvision.io import read_imagefrom torch_geometric.datasets import BrcaTcgadataset = BrcaTcga(root='/Users/vsriram/Developer/vsriram24.github.io/posts/pytorch-brca/')/Users/vsriram/.virtualenvs/r-reticulate/lib/python3.12/site-packages/torch_geometric/data/dataset.py:238: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

if osp.exists(f) and torch.load(f) != _repr(self.pre_transform):

/Users/vsriram/.virtualenvs/r-reticulate/lib/python3.12/site-packages/torch_geometric/data/dataset.py:246: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

if osp.exists(f) and torch.load(f) != _repr(self.pre_filter):

/Users/vsriram/.virtualenvs/r-reticulate/lib/python3.12/site-packages/torch_geometric/io/fs.py:215: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

return torch.load(f, map_location)len(dataset)

dataset.num_classes

dataset.num_node_features/Users/vsriram/.virtualenvs/r-reticulate/lib/python3.12/site-packages/torch_geometric/data/dataset.py:169: UserWarning: Found floating-point labels while calling `dataset.num_classes`. Returning the number of unique elements. Please make sure that this is expected before proceeding.

warnings.warn("Found floating-point labels while calling "1data = dataset[0]

data

data.is_undirected()FalseWe start by checking to see if we can train our model on a hardware accelerator like the GPU or MPS if available. Otherwise, we’ll use the CPU.

# Get cpu, gpu or mps device for training.

device = (

"cuda"

if torch.cuda.is_available()

else "mps"

if torch.backends.mps.is_available()

else "cpu"

)

print(f"Using {device} device")Using mps deviceCode for processing data samples can get messy and hard to maintain. We ideally want our dataset code to be de-coupled from model training code for better readability and modularity. We will break our analysis into the following two sections: Data and Modeling.

Data

Importing and transforming the data

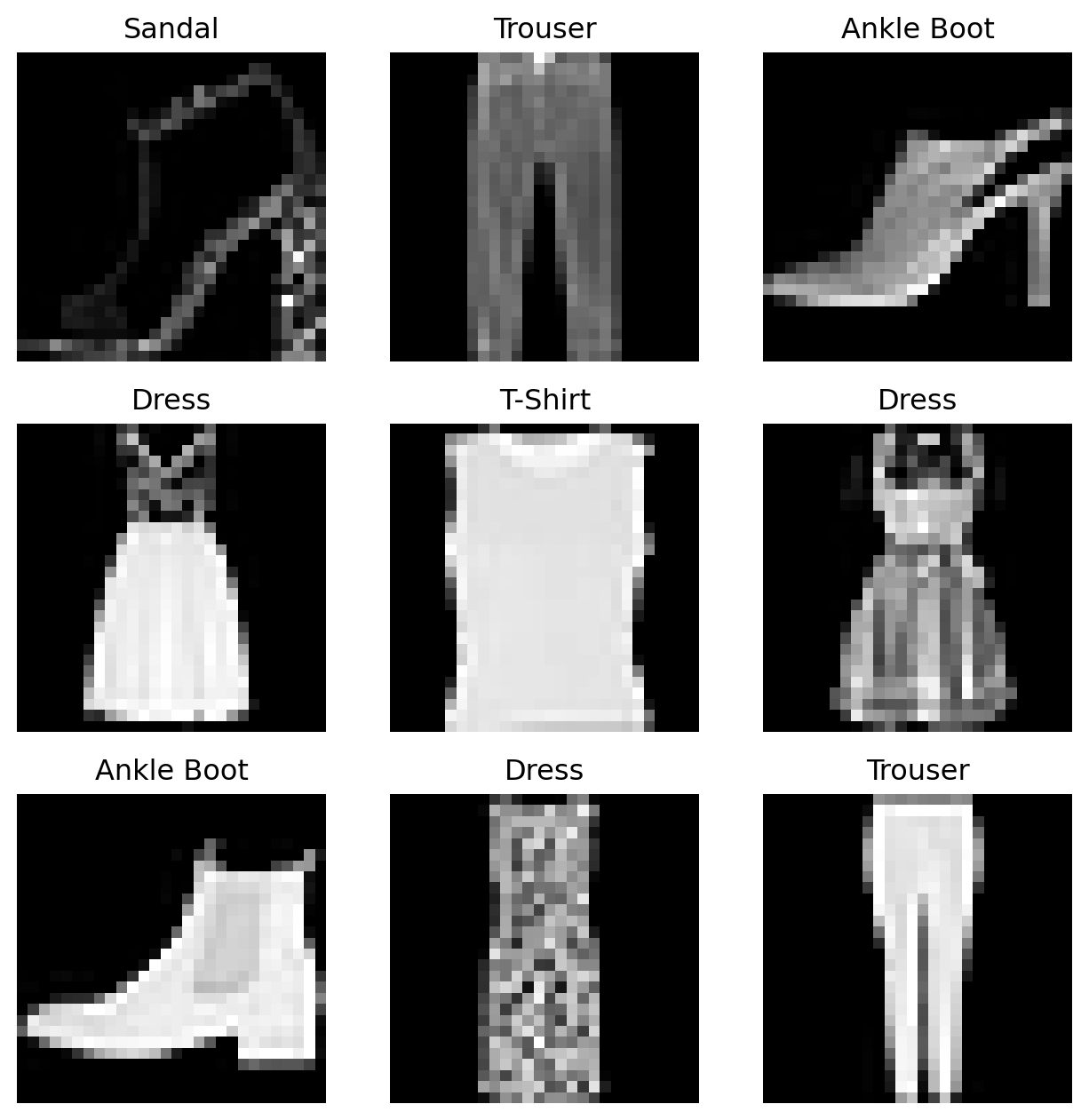

PyTorch offers domain-specific libraries such as TorchText, TorchVision, and TorchAudio. In this tutorial, we will be using a TorchVision dataset. The torchvision.datasets module contains Dataset objects for many real-world vision data. Here, we will use the FashionMNIST dataset. Fashion-MNIST is a dataset of images consisting of 60,000 training examples and 10,000 test examples. Each example includes a 28x28 grayscale image and an associated label from one of 10 classes.

Each PyTorch Dataset stores a set of samples and their corresponding labels. Data do not always come in the final processed form that is required for training ML algorithms. We use transforms to perform some manipulation of the data and make it suitable for training. Every TorchVision Dataset includes 2 arguments: transform to modify the samples and target_transform to modify the labels.

The FashionMNIST features are in PIL Image format. For training, we need the features as normalized tensors, and the labels as one-hot encoded tensors. To make this transformation, we use the ToTensor() function. ToTensor() converts a PIL image or NumPy ndarray into a FloatTensor and scales the image’s pixel intensity values in the range [0., 1.]

# Download training data from open datasets.

training_data = datasets.FashionMNIST(

root="data",

train=True,

download=True,

transform=ToTensor()

)

# Download test data from open datasets.

test_data = datasets.FashionMNIST(

root="data",

train=False,

download=True,

transform=ToTensor()

)We now have our data uploaded! We can index an input Dataset manually (i.e. training_data[index]) to get individual samples. Here, we use matplotlib to visualize some samples in our training data.

labels_map = {

0: "T-Shirt",

1: "Trouser",

2: "Pullover",

3: "Dress",

4: "Coat",

5: "Sandal",

6: "Shirt",

7: "Sneaker",

8: "Bag",

9: "Ankle Boot",

}

figure = plt.figure(figsize=(8, 8))

cols, rows = 3, 3

for i in range(1, cols * rows + 1):

sample_idx = torch.randint(len(training_data), size=(1,)).item()

img, label = training_data[sample_idx]

figure.add_subplot(rows, cols, i)

plt.title(labels_map[label])

plt.axis("off")

plt.imshow(img.squeeze(), cmap="gray")

plt.show()

Preparing data for training with DataLoaders

While training a model, we typically want to pass samples in “minibatches”, reshuffle the data at every epoch to reduce model overfitting, and use Pythonic multiprocessing to speed up data retrieval. DataLoader is an iterable that abstracts this complexity for us in an easy API. We pass our input Dataset as an argument to DataLoader. This wraps an iterable over our dataset, supporting automatic batching, sampling, shuffling, and multiprocess data loading in the process.

Here we define a batch size of 64 - each element in the DataLoader iterable will return a batch of 64 features and labels.

batch_size = 64

# Create data loaders.

train_dataloader = DataLoader(training_data, batch_size=batch_size, shuffle=True)

test_dataloader = DataLoader(test_data, batch_size=batch_size, shuffle=True)

for X, y in test_dataloader:

print(f"Shape of X [N, C, H, W]: {X.shape}")

print(f"Shape of y: {y.shape} {y.dtype}")

breakShape of X [N, C, H, W]: torch.Size([64, 1, 28, 28])

Shape of y: torch.Size([64]) torch.int64Iterating over the DataLoader

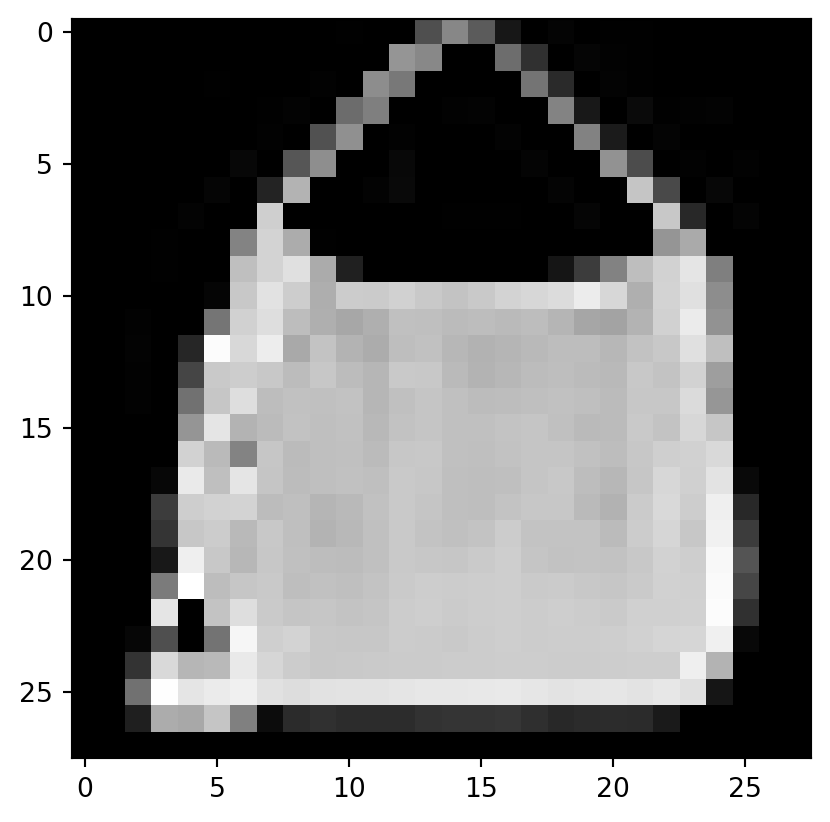

Now that we have loaded our data into a DataLoader, we can iterate through the dataset as needed (using next(iter(DataLoader)). Each iteration returns a batch of train_features and train_labels (containing batch_size=64 features and labels respectively). Because we specified shuffle=True, the data are shuffled after we iterate over all of our batches.

# Display image and label.

train_features, train_labels = next(iter(train_dataloader))

print(f"Feature batch shape: {train_features.size()}")

print(f"Labels batch shape: {train_labels.size()}")

img = train_features[0].squeeze()

label = train_labels[0]

plt.imshow(img, cmap="gray")

plt.show()

print(f"Label: {label}")Feature batch shape: torch.Size([64, 1, 28, 28])

Labels batch shape: torch.Size([64])

Label: 8

Modeling

Defining a neural network

Neural networks comprise of layers/modules that perform operations on data. The torch.nn namespace provides all the building blocks you need to build your own neural network. Every module in PyTorch subclasses the nn.Module. A neural network is a module itself that consists of other modules (layers). This nested structure allows for building and managing complex architectures easily.

To define a neural network in PyTorch, we create a class that inherits from nn.Module. We define the layers of the network in the __init__ function and specify how data will pass through the network in the forward function.

# Define model

class NeuralNetwork(nn.Module):

def __init__(self):

super().__init__()

self.flatten = nn.Flatten()

self.linear_relu_stack = nn.Sequential(

nn.Linear(28*28, 512),

nn.ReLU(),

nn.Linear(512, 512),

nn.ReLU(),

nn.Linear(512, 10)

)

def forward(self, x):

x = self.flatten(x)

logits = self.linear_relu_stack(x)

return logitsNow that we’ve defined the structure of our NeuralNetwork, we can create an instance of it and move it to a faster device (GPU or MPS) if available to accelerate operations. We can also print its structure to see the layers that we’ve just defined.

model = NeuralNetwork().to(device)

print(f"Model structure: {model}")Model structure: NeuralNetwork(

(flatten): Flatten(start_dim=1, end_dim=-1)

(linear_relu_stack): Sequential(

(0): Linear(in_features=784, out_features=512, bias=True)

(1): ReLU()

(2): Linear(in_features=512, out_features=512, bias=True)

(3): ReLU()

(4): Linear(in_features=512, out_features=10, bias=True)

)

)Many layers inside a neural network are parameterized, meaning that they have associated weights and biases that are optimized during training. Subclassing nn.Module automatically tracks all fields defined inside your model object, and makes all parameters accessible using your model’s parameters() or named_parameters() methods.

The linear layer is a module that applies a linear transformation on the input using its stored weights and biases. Non-linear activations are what create the complex mappings between the model’s inputs and outputs. They are applied after linear transformations to introduce nonlinearity, helping neural networks learn a wide variety of phenomena.

In this model, we use nn.ReLU between our linear layers, but there are other options for activations to introduce non-linearity in your model.

for name, param in model.named_parameters():

print(f"Layer: {name} | Size: {param.size()} | Values : {param[:2]} \n")Layer: linear_relu_stack.0.weight | Size: torch.Size([512, 784]) | Values : tensor([[-0.0137, 0.0180, -0.0038, ..., -0.0288, 0.0247, 0.0047],

[-0.0027, -0.0064, 0.0060, ..., 0.0168, 0.0072, 0.0185]],

device='mps:0', grad_fn=<SliceBackward0>)

Layer: linear_relu_stack.0.bias | Size: torch.Size([512]) | Values : tensor([-0.0209, 0.0113], device='mps:0', grad_fn=<SliceBackward0>)

Layer: linear_relu_stack.2.weight | Size: torch.Size([512, 512]) | Values : tensor([[ 0.0398, -0.0057, 0.0337, ..., 0.0392, 0.0286, 0.0139],

[-0.0359, 0.0057, 0.0314, ..., -0.0165, -0.0135, -0.0054]],

device='mps:0', grad_fn=<SliceBackward0>)

Layer: linear_relu_stack.2.bias | Size: torch.Size([512]) | Values : tensor([-0.0152, -0.0237], device='mps:0', grad_fn=<SliceBackward0>)

Layer: linear_relu_stack.4.weight | Size: torch.Size([10, 512]) | Values : tensor([[ 0.0041, 0.0418, 0.0345, ..., -0.0015, -0.0423, -0.0096],

[-0.0395, 0.0183, -0.0321, ..., 0.0371, -0.0126, -0.0107]],

device='mps:0', grad_fn=<SliceBackward0>)

Layer: linear_relu_stack.4.bias | Size: torch.Size([10]) | Values : tensor([ 0.0070, -0.0366], device='mps:0', grad_fn=<SliceBackward0>)

Optimizing the Model Parameters

Now that we have our data and our model, it’s time to train, validate and test our model by optimizing its parameters on the input data! Training a model is an iterative process; in each iteration the model makes a guess about the output, calculates the error in its guess (loss), collects the derivatives of the error with respect to its parameters, and optimizes these parameters using gradient descent.

Hyperparameters

Hyperparameters are adjustable parameters that let you control the model optimization process. Different hyperparameter values can impact model training and convergence rates.

We define the following hyperparameters for training: - Number of Epochs - the number times to iterate over the dataset - Batch Size - the number of data samples propagated through the network before the parameters are updated - Learning Rate - how much to update models parameters at each batch/epoch. Smaller values yield slow learning speed, while large values may result in unpredictable behavior during training.

learning_rate = 1e-3

batch_size = 64

epochs = 5Optimization Loop

To train our model, we need a loss function as well as an optimizer.

Once we set our hyperparameters, we can then train and optimize our model with an optimization loop. Each iteration of the optimization loop is called an epoch.

Each epoch consists of two main parts: - The Train Loop - iterate over the training dataset and try to converge to optimal parameters. - The Validation/Test Loop - iterate over the test dataset to check if model performance is improving.

The loss function measures the degree of dissimilarity between an obtained result and the target value, and it is the loss function that we want to minimize during training. To calculate the loss we make a prediction using the inputs of our given data sample and compare it against the true data label value.

Common loss functions include nn.MSELoss (Mean Square Error) for regression tasks, and nn.NLLLoss (Negative Log Likelihood) for classification. nn.CrossEntropyLoss combines nn.LogSoftmax and nn.NLLLoss.

We pass our model’s output logits to nn.CrossEntropyLoss, which will normalize the logits and compute the prediction error.

# Initialize the loss function

loss_fn = nn.CrossEntropyLoss()Optimization is the process of adjusting model parameters to reduce model error in each training step. Optimization algorithms define how this process is performed (in this example we use Stochastic Gradient Descent). All optimization logic is encapsulated in the optimizer object. Here, we use the SGD optimizer; additionally, there are many different optimizers available in PyTorch such as ADAM and RMSProp, that work better for different kinds of models and data.

We initialize the optimizer by registering the model’s parameters that need to be trained, and passing in the learning rate hyperparameter.

optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)Inside the training loop, optimization happens in three steps: - Call optimizer.zero_grad() to reset the gradients of model parameters. Gradients by default add up; to prevent double-counting, we explicitly zero them at each iteration. - Backpropagate the prediction loss with a call to loss.backward(). PyTorch deposits the gradients of the loss w.r.t. each parameter. - Once we have our gradients, we call optimizer.step() to adjust the parameters by the gradients collected in the backward pass.

Full Implementation

We define train_loop that loops over our optimization code, and test_loop that evaluates the model’s performance against our test data.

def train_loop(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset)

# Set the model to training mode - important for batch normalization and dropout layers

# Unnecessary in this situation but added for best practices

model.train()

for batch, (X, y) in enumerate(dataloader):

X = X.to(device)

y = y.to(device)

# Compute prediction and loss

pred = model(X)

loss = loss_fn(pred, y)

# Backpropagation

loss.backward()

optimizer.step()

optimizer.zero_grad()

if batch % 100 == 0:

loss, current = loss.item(), batch * batch_size + len(X)

print(f"loss: {loss:>7f} [{current:>5d}/{size:>5d}]")

def test_loop(dataloader, model, loss_fn):

# Set the model to evaluation mode - important for batch normalization and dropout layers

# Unnecessary in this situation but added for best practices

model.eval()

size = len(dataloader.dataset)

num_batches = len(dataloader)

test_loss, correct = 0, 0

# Evaluating the model with torch.no_grad() ensures that no gradients are computed during test mode

# also serves to reduce unnecessary gradient computations and memory usage for tensors with requires_grad=True

with torch.no_grad():

for X, y in dataloader:

X = X.to(device)

y = y.to(device)

pred = model(X)

test_loss += loss_fn(pred, y).item()

correct += (pred.argmax(1) == y).type(torch.float).sum().item()

test_loss /= num_batches

correct /= size

print(f"Test Error: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")In a single training loop, the model makes predictions on the training dataset (fed to it in batches), and then backpropagates the prediction error to adjust the model’s parameters.

We can also check the model’s performance against the test dataset to ensure it is learning.

The training process is conducted over several iterations (epochs). During each epoch, the model learns parameters to make better predictions. We print the model’s accuracy and loss at each epoch; we’d like to see the accuracy increase and the loss decrease with every epoch.

for t in range(epochs):

print(f"Epoch {t+1}\n-------------------------------")

train_loop(train_dataloader, model, loss_fn, optimizer)

test_loop(test_dataloader, model, loss_fn)

print("Done!")Epoch 1

-------------------------------

loss: 2.313123 [ 64/60000]

loss: 2.285968 [ 6464/60000]

loss: 2.279950 [12864/60000]

loss: 2.261608 [19264/60000]

loss: 2.234406 [25664/60000]

loss: 2.228365 [32064/60000]

loss: 2.181631 [38464/60000]

loss: 2.178308 [44864/60000]

loss: 2.168746 [51264/60000]

loss: 2.135072 [57664/60000]

Test Error:

Accuracy: 36.4%, Avg loss: 2.136111

Epoch 2

-------------------------------

loss: 2.136322 [ 64/60000]

loss: 2.113021 [ 6464/60000]

loss: 2.088278 [12864/60000]

loss: 2.043051 [19264/60000]

loss: 2.046329 [25664/60000]

loss: 2.025762 [32064/60000]

loss: 1.939848 [38464/60000]

loss: 1.940282 [44864/60000]

loss: 1.881336 [51264/60000]

loss: 1.876047 [57664/60000]

Test Error:

Accuracy: 56.8%, Avg loss: 1.832395

Epoch 3

-------------------------------

loss: 1.842130 [ 64/60000]

loss: 1.771711 [ 6464/60000]

loss: 1.715626 [12864/60000]

loss: 1.661744 [19264/60000]

loss: 1.599232 [25664/60000]

loss: 1.558062 [32064/60000]

loss: 1.587824 [38464/60000]

loss: 1.659741 [44864/60000]

loss: 1.540324 [51264/60000]

loss: 1.457054 [57664/60000]

Test Error:

Accuracy: 62.3%, Avg loss: 1.464174

Epoch 4

-------------------------------

loss: 1.465014 [ 64/60000]

loss: 1.422204 [ 6464/60000]

loss: 1.399371 [12864/60000]

loss: 1.378896 [19264/60000]

loss: 1.340550 [25664/60000]

loss: 1.292807 [32064/60000]

loss: 1.194880 [38464/60000]

loss: 1.318207 [44864/60000]

loss: 1.210237 [51264/60000]

loss: 1.319617 [57664/60000]

Test Error:

Accuracy: 63.7%, Avg loss: 1.218638

Epoch 5

-------------------------------

loss: 1.151209 [ 64/60000]

loss: 1.275276 [ 6464/60000]

loss: 1.121574 [12864/60000]

loss: 1.157526 [19264/60000]

loss: 1.165031 [25664/60000]

loss: 1.169642 [32064/60000]

loss: 1.062104 [38464/60000]

loss: 1.159328 [44864/60000]

loss: 1.061364 [51264/60000]

loss: 1.091813 [57664/60000]

Test Error:

Accuracy: 64.7%, Avg loss: 1.065644

Done!We can now use this model to make individual predictions.

To use the model, we pass it the input data. This executes the model’s forward, along with some background operations. Note that we do not call model.forward() directly!

classes = [

"T-shirt/top",

"Trouser",

"Pullover",

"Dress",

"Coat",

"Sandal",

"Shirt",

"Sneaker",

"Bag",

"Ankle boot",

]

model.eval()

x, y = test_data[0][0], test_data[0][1]

with torch.no_grad():

x = x.to(device)

pred = model(x)

predicted, actual = classes[pred[0].argmax(0)], classes[y]

print(f'Predicted: "{predicted}", Actual: "{actual}"')Predicted: "Ankle boot", Actual: "Ankle boot"Summary

This concludes my walkthrough of using basic PyTorch to process data and train a neural network. As mentioned, in a future post in the coming weeks, I will iterate on this pipeline, demonstrating a different neural network architecture and more data exploration for a different dataset. Until next time, [VS]Coders!